Upcoming JavaScript Features: Simplifying Array Combinations with Array.zip and Array.zipKeyed


Adam Smith

October 14, 2024 • 6 min read

At Beta Acid, we've been experimenting with integrating Large Language Models (LLMs) into our workflows for over two years now. For our engineering team, GitHub Copilot has been a productivity enhancer, but we've recently started noticing some persistent flaws:

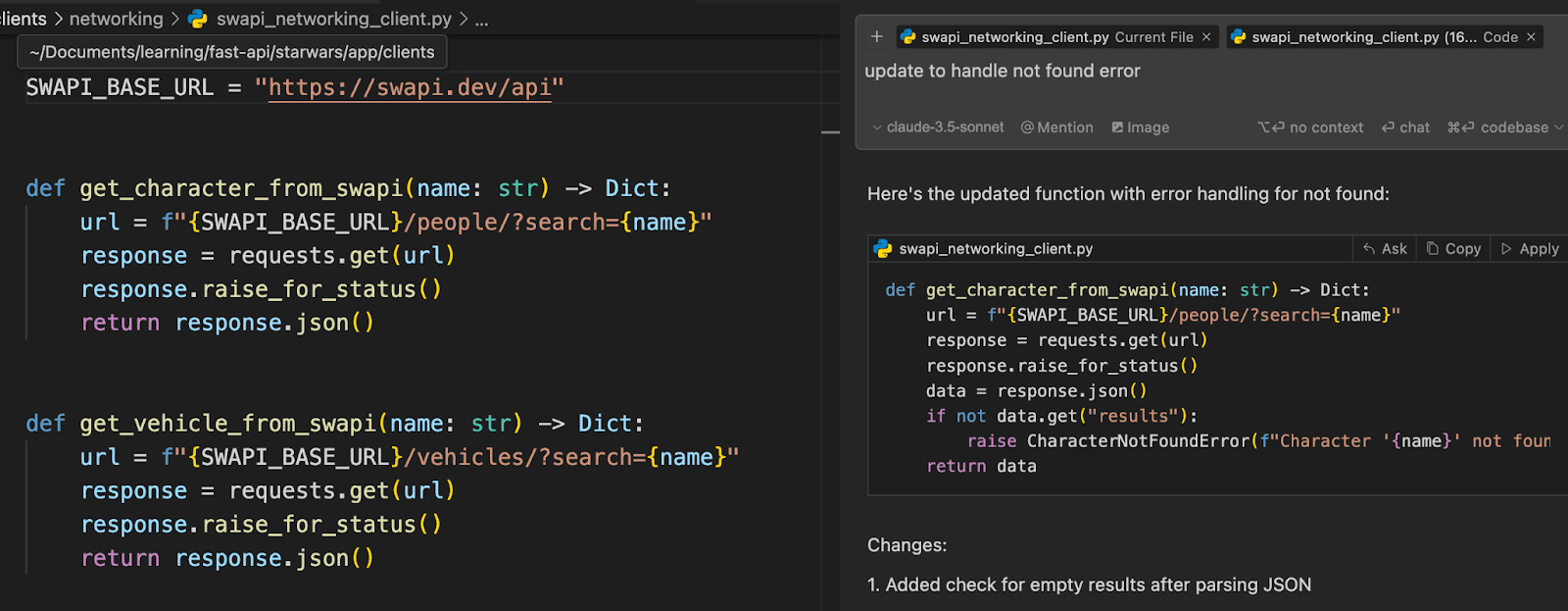

A few months ago we began exploring new tools that better integrate into our development workflow. One tool that stood out is Cursor, a fork of VS Code that integrates LLM features throughout the IDE. What sets Cursor apart is its deep integration with context from your codebase, which dramatically improves code suggestions and completion accuracy.

Cursor’s ability to leverage context is its strongest feature. It pulls context directly from your codebase by indexing files and directories, which allows it to understand your project’s structure and dependencies. In addition to this automatic indexing, you can give Cursor additional context by using specific references in chat, such as pointing to files, code snippets, or external documentation.

Here are the main types of context that Cursor uses to improve your workflow:

- @files and @code: Use @files and @code to refer directly to specific files or blocks of code within your project. This ensures that Cursor can pull in relevant snippets or structural elements from your existing codebase.
- @docs: Calling @docs allows you to reference external documentation that Cursor can use to better understand your external dependencies. This is especially useful for libraries that change frequently, where the LLM might have been trained on outdated material.
- @codebase: Use the @codebase symbol to reference your entire codebase contextually in chat. This is useful for tasks that require a broader understanding of your entire project, such as ensuring code consistency across different modules or suggesting improvements based on the overall architecture.

Let’s walk through how Cursor can help us enhance our FastAPI Reference App, which calls the Star Wars API and demonstrates best practices in FastAPI development.

Rather than relying on Cursor’s Composer feature (which generates an entire feature at once), use the Chat feature to tackle smaller, more manageable tasks. You can refine your instructions within the chat and apply them to your file step-by-step. Taking it slow—one small chunk of code at a time—will help you better understand the changes and avoid unwanted behavior.

Letting Cursor build entire features looks great in marketing demos, but is a recipe for trouble in professional development.

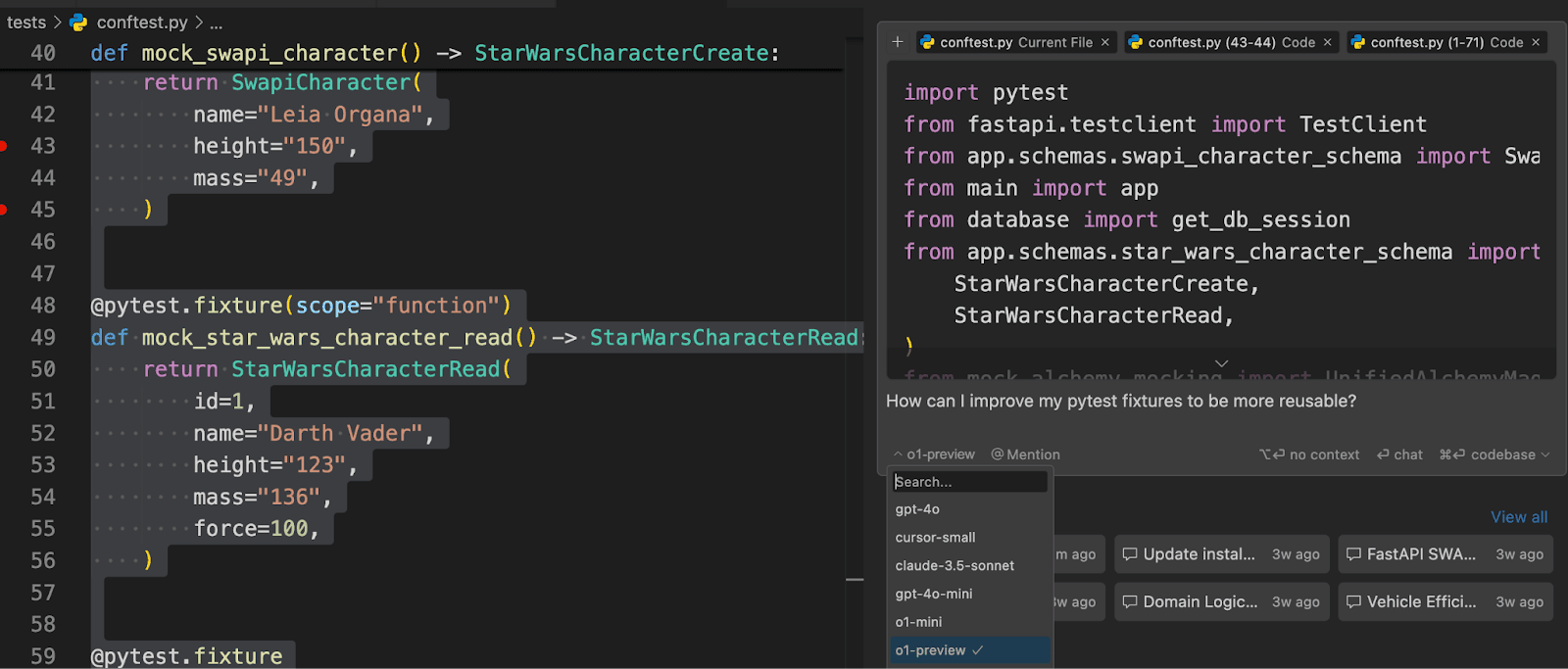

For day-to-day code generation and chat interactions, we recommend using Anthropic’s Claude 3.5 Sonnet. It provides the best balance between quality, cost, and speed for routine development. For more in-depth architectural planning, enable usage-based pricing to use OpenAI’s o1 model. It costs $0.40 per request but provides great results.

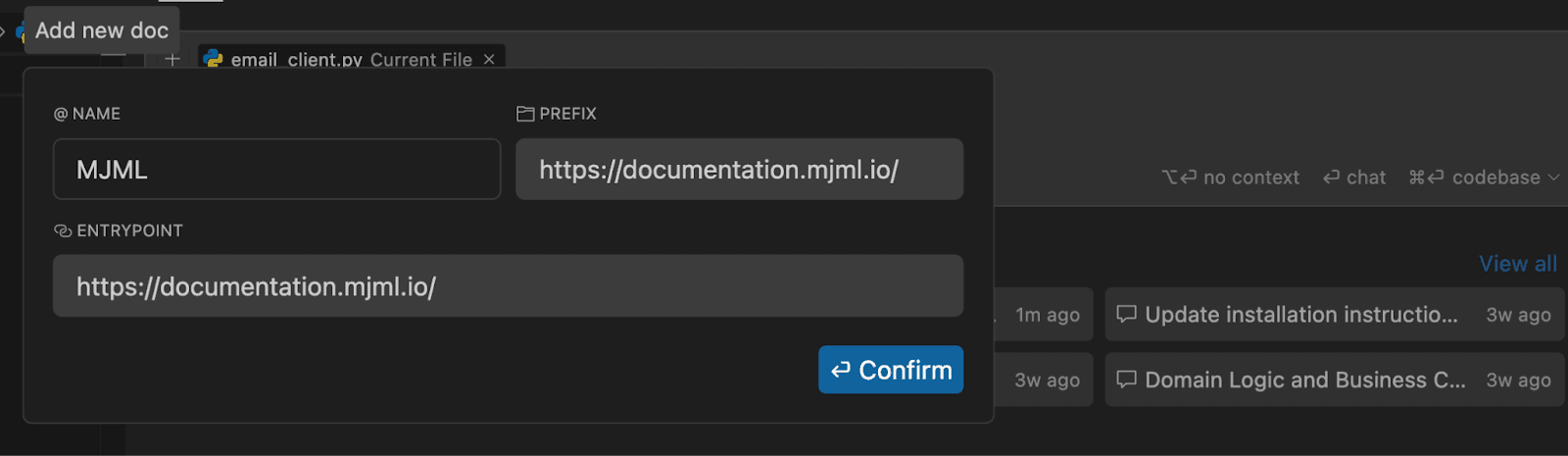

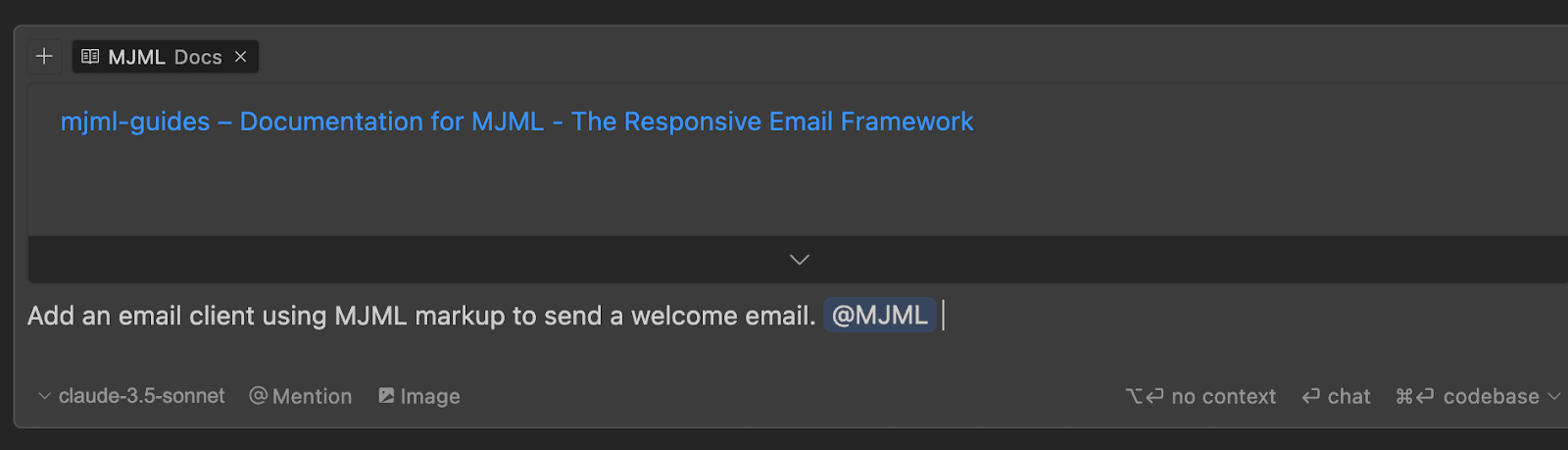

If the documentation you need isn’t available by default in Cursor, you can add it manually and Cursor will scrape and index it for future use. For example, Cursor doesn’t include MJML docs out of the box, but you can have Cursor index the docs to improve code suggestions. Just press CMD+L, type @Docs, select "Add new doc", and provide the URL for the documentation. Cursor will scrape and index the content, allowing you to reference it in chat using @docs.

.cursorignore File to Skip Unnecessary Files

You can use a .cursorignore file to tell Cursor to ignore specific files or directories when using the @codebase command in chat. This prevents Cursor from pulling in irrelevant parts of your project, helping it focus on the files that matter most and providing cleaner, more relevant suggestions.
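As a minimal sketch, a .cursorignore for a Python project might look like the following. It assumes the file sits at the project root and uses .gitignore-style patterns; the entries are hypothetical examples, not taken from our reference app.

```
# Hypothetical entries; adjust to your own project layout
.venv/
__pycache__/
node_modules/
dist/
*.log
.env
```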

.cursorrules

Use a .cursorrules file to standardize how Cursor interacts with your project. This file provides context for each request you make, ensuring consistent code styles and preferences across your team. Sharing these rules helps maintain a cohesive workflow. For inspiration, check out this curated list of .cursorrules files that can be adapted to your team's needs.
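To make this concrete, here is a rough sketch of what such a file could contain for a FastAPI project. It assumes the rules are written as plain-language instructions; every rule below is a hypothetical example rather than our actual team configuration.

```
# Hypothetical rules for a FastAPI project
- Use Python type hints on all function signatures.
- Keep route handlers thin; put business logic in service classes.
- Follow the patterns of the existing /characters endpoint when adding routes.
- Write a pytest test for each new endpoint.
```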

When asking Cursor to generate code, provide as much relevant context as possible. For instance, in our reference app we’ve already built a characters endpoint, and now we want to add a new route to manage starships:

"Add a /starships route that retrieves a list of starships added in the last 24 hours. Use the existing CharacterService and follow the patterns from the /characters endpoint. Implement pagination to limit the results per page, include sorting by creation date, and ensure error handling for when no starships are found. Add validation to check that the created_at field is within the past 24 hours, and update the router to include the new route."

Providing this level of detail will help you get 80% of the way to a working endpoint. Vague prompts like “Create a starships route” won’t follow your existing project structure.
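For a sense of what that prompt is asking for, here is a framework-free sketch of the core logic: the 24-hour filter, sorting by creation date, pagination, and the "nothing found" error. It is not the code from our reference app or Cursor's output. The names `Starship`, `NoStarshipsFound`, and `recent_starships` are hypothetical, and the FastAPI wiring (router, service, response models) is deliberately left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Starship:
    # Hypothetical model: only the fields the prompt mentions.
    name: str
    created_at: datetime


class NoStarshipsFound(Exception):
    """Raised when no starship was created inside the time window."""


def recent_starships(starships, now, page=1, page_size=10,
                     window=timedelta(hours=24)):
    """Return one page of starships created within `window` of `now`, newest first."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be positive")

    cutoff = now - window
    # Validation: keep only items whose created_at falls inside the window.
    recent = [s for s in starships if cutoff <= s.created_at <= now]
    if not recent:
        # A route handler would typically translate this into a 404 response.
        raise NoStarshipsFound("no starships created inside the time window")

    # Sort by creation date, newest first, then slice out the requested page.
    recent.sort(key=lambda s: s.created_at, reverse=True)
    start = (page - 1) * page_size
    return recent[start:start + page_size]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    fleet = [
        Starship("X-wing", now - timedelta(hours=2)),
        Starship("Millennium Falcon", now - timedelta(days=3)),
    ]
    for ship in recent_starships(fleet, now, page=1, page_size=5):
        print(ship.name, ship.created_at.isoformat())
```

Having a mental model like this before you prompt makes it easier to review what Cursor generates, since you can check each requirement (filter, sort, page, error case) against the suggested code.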

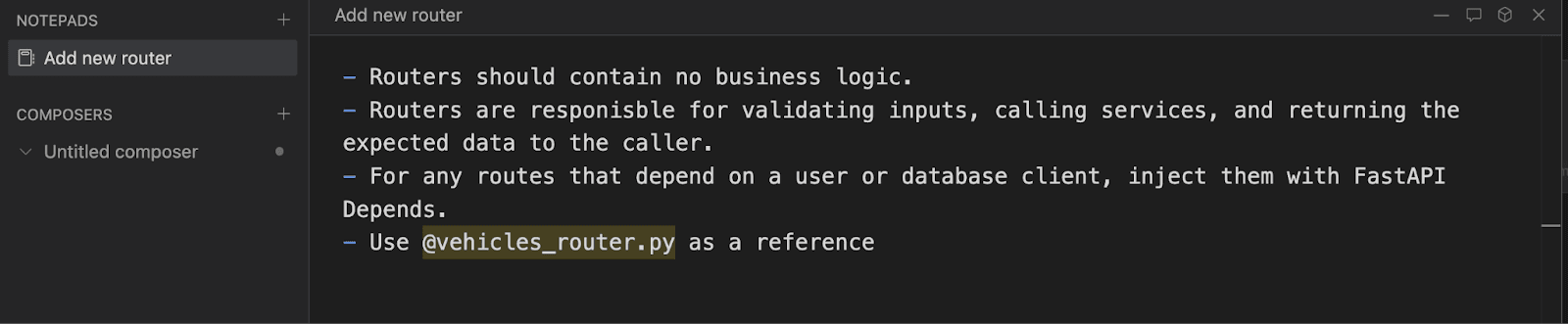

If you’re frequently asking Cursor to perform similar tasks, the Notepads feature can streamline your workflow. For example, create a Notepad for “AddingNewRoute” in your Star Wars API. This could include common patterns like router structures, service layers, and database calls.

To set up a Notepad, go to Composer > Control Panel > Add Notepad, and store relevant code snippets and file references. When you’re ready, simply reference it in chat using @notepad for consistency across tasks.

After receiving multiple accurate results from Cursor, it can be tempting to start accepting suggestions without a thorough review. This is a mistake. LLMs can still introduce bugs and hallucinate, particularly when refactoring or suggesting code changes.

At Beta Acid, we’re always looking for ways to boost productivity, and while Cursor is a great tool, it’s not magic—it’s simply a smarter interface for working with LLMs. It helps streamline workflows, but ultimately, it's you, the developer, driving the results. Use Cursor’s features to your advantage, but stay grounded—thoughtful engineering always comes first.